YouTube to Stop Recommending Conspiracy Videos (Again)

Almost a year ago, CEO Susan Wojcicki said YouTube would tweak its recommendation engine to push more appropriate results. But as BuzzFeed found recently, that didn't really happen.

YouTube is again claiming it'll tweak its recommendation system to promote fewer conspiracy videos.

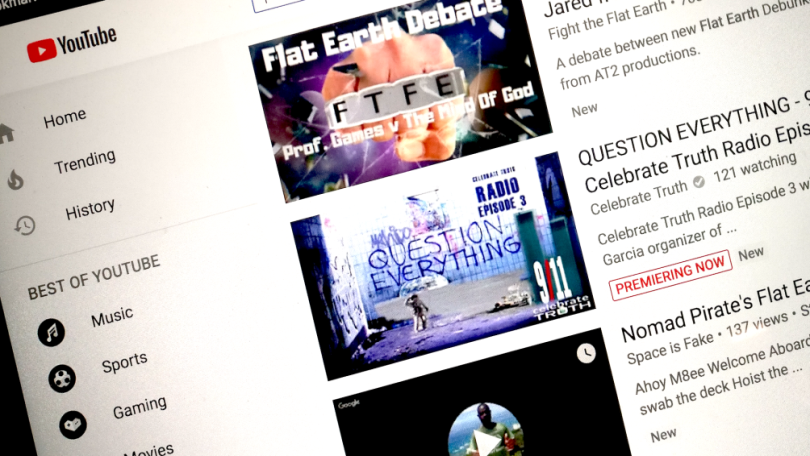

The Google-owned service announced the change after BuzzFeed published a report detailing how YouTube's recommendation systems can lead you down a "rabbit hole" of misinformation and politically charged content.

"We'll begin reducing recommendations of borderline content and content that could misinform users in harmful ways—such as videos promoting a phony miracle cure for a serious illness, claiming the Earth is flat, or making blatantly false claims about historic events like 9/11," the company said in a Friday blog post.

What will be different this time isn't clear. Almost a year ago, YouTube's CEO Susan Wojcicki said the video-sharing service was going to tweak its recommendation engine to push more appropriate results. At the time, she specifically cited the need for YouTube to promote a "diversity of content" and "authoritative sources" when it comes to videos regarding news and politics.

But on Thursday, BuzzFeed ran a report showing the different ways YouTube's recommendation engine can serve up content from fringe sources. For example, a video about the US Congress can eventually lead a user to encounter an anti-immigrant clip from a conservative think tank that's been accused of promoting hate.

The same algorithms will also push conspiracy videos when watchers are looking up information on current events. In another example from BuzzFeed's report, a "nancy pelosi speech" search on YouTube can trigger the recommendation system to eventually serve up content examining former President George Bush's funeral for clues relating to the QAnon conspiracy.

Going forward, YouTube said it's going to reduce the number of conspiracy videos that its recommendation algorithms push to viewers. However, the company is stopping short of a complete recommendation ban.

"When relevant, these videos may appear in recommendations for channel subscribers and in search results," the YouTube team said. "We think this change strikes a balance between maintaining a platform for free speech and living up to our responsibility to users."

YouTube will start rolling out the changes in the US before expanding globally. But the video service made no mention of how it'll tackle all the political content on the platform, which YouTube's recommendation engine also likes to push. Critics claim the same system risks radicalizing viewers and dividing America by feeding people near-endless streams of content from pundits on the far-right or far-left.

In its defense, YouTube is emphasizing the positive side of its recommendation engine. "When recommendations are at their best, they help users find a new song to fall in love with, discover their next favorite creator, or learn that great paella recipe," the YouTube team said in the blog post. "That's why we update our recommendations system all the time—we want to make sure we're suggesting videos that people actually want to watch."
